- Home

- About Journals

-

Information for Authors/ReviewersEditorial Policies

Publication Fee

Publication Cycle - Process Flowchart

Online Manuscript Submission and Tracking System

Publishing Ethics and Rectitude

Authorship

Author Benefits

Reviewer Guidelines

Guest Editor Guidelines

Peer Review Workflow

Quick Track Option

Copyediting Services

Bentham Open Membership

Bentham Open Advisory Board

Archiving Policies

Fabricating and Stating False Information

Post Publication Discussions and Corrections

Editorial Management

Advertise With Us

Funding Agencies

Rate List

Kudos

General FAQs

Special Fee Waivers and Discounts

- Contact

- Help

- About Us

- Search

The Open Cybernetics & Systemics Journal

(Discontinued)

ISSN: 1874-110X ― Volume 12, 2018

Block Compressive Sensing Algorithm Based on Interleaving Extraction in Contourlet Domain

Hongbo Bi1, 2, *, Ying Liu1, Mengmeng Wu1, Yubo Zhang1

Abstract

We propose a block image compressive sensing algorithm based on interleaving extraction in Contourlet domain to improve the performance of image sparse representation and quality of reconstructed images. First, we propose the interleaving extraction scheme and partition an image into several sub-images using interleaving extraction. Second, we represent the sub-images in Contourlet domain and measure Contourlet sub-band coefficient matrices using different dimensional Gaussian random matrices. Finally, we rebuild the sub-band coefficients with the orthogonal matching pursuit algorithm and conduct Contourlet inverse transform to reconstruct the original images. Experimental results show that the subjective visual effect and peak signal to noise ratio of the proposed algorithm are superior to those of the original compressive sensing algorithms under the same sampling rate.

Article Information

Identifiers and Pagination:

Year: 2016Volume: 10

First Page: 218

Last Page: 227

Publisher Id: TOCSJ-10-218

DOI: 10.2174/1874110X01610010218

Article History:

Received Date: 29/01/2016Revision Received Date: 02/09/2016

Acceptance Date: 20/09/2016

Electronic publication date: 08/11/2016

Collection year: 2016

open-access license: This is an open access article licensed under the terms of the Creative Commons Attribution-Non-Commercial 4.0 International Public License (CC BY-NC 4.0) (https://creativecommons.org/licenses/by-nc/4.0/legalcode), which permits unrestricted, non-commercial use, distribution and reproduction in any medium, provided the work is properly cited.

* Address correspondence to this author at the Northeast Petroleum University No. 199 Fazhan Road, High-Tech district, Daqing, Heilongjiang Province, China; Tel: 08613664590305; E-mails: bihongbo@hotmail.com, bhbdq@126.com

| Open Peer Review Details | |||

|---|---|---|---|

| Manuscript submitted on 29-01-2016 |

Original Manuscript | Block Compressive Sensing Algorithm Based on Interleaving Extraction in Contourlet Domain | |

1. INTRODUCTION

In 2006, Donoho and Candès developed compressive sensing (CS) theory from signal sparse decomposition and approximation theory. CS can reconstruct the original signal with high quality by obtaining limited measurement data, which provides a new solution to image compression [1E.J. Candès, "Compressive sampling", In: Proceedings on the International Congress of Mathematicians, vol. 3. Madrid, 2006, pp. 1433-1452.]. CS achieves original signal sampling and compression simultaneously, avoiding resource waste of Shannon’s theorem through traditional data processing method: first sampling and then compressing. As a result, CS greatly reduces signal sampling frequency, signal processing time, computational expense, and data storage cost [2E.J. Candès, and M.B. Wakin, "An introduction to compressive sampling", IEEE Signal Process. Mag., vol. 25, no. 2, pp. 21-30, 2008.

[http://dx.doi.org/10.1109/MSP.2007.914731] ].

According to CS theory, the sparse representation, measurement matrix, and reconstruction algorithm are the three key elements in reconstructing the original signal from the sparse signal with high probability [3X. Li, A. Rueetschi, A. Scaglione, and Y.C. Eldar, "Compressive link acquisition in multiuser communications", IEEE Trans. Signal Process., vol. 61, no. 12, pp. 3229-3245, 2013.

[http://dx.doi.org/10.1109/TSP.2013.2258014] ]. Currently, the commonly used sparse representation methods include discrete cosine transform (DCT), discrete wavelet transform (DWT), multi-scale geometric analysis, and redundant dictionaries [4J. Ma, "Improved iterative curvelet thresholding for compressed sensing and measurement", IEEE Trans. Instrum. Meas., vol. 60, no. 1, pp. 126-136, 2011.

[http://dx.doi.org/10.1109/TIM.2010.2049221] ]. DCT demonstrates poor time-frequency analytical performance; DWT experiences difficulty in reflecting image edge information accurately, and thus unable to meet the requirement of image sparse representation. In 2002, Do and Vetterli proposed Contourlet transform. Contourlet transform can represent the anisotropic characteristics and approximate singular curve of an image with minimal coefficients, effectively solving the sparse representation problem of high-dimensional space data. The image representation performance of Contourlet transform is better than most widely used wavelet transform methods, compensating for the lack of multi-directional wavelets [5MN Do, and M Vetterli, "Contourlets: a new directional mutliresolution image represention, Signal", Syst. Comput., pp. 497-501, 2002. Jan. 2002, 6M.N. Do, and M. Vetterli, "The contourlet transform: an efficient directional multiresolution image representation", IEEE Trans. Image Process., vol. 14, no. 12, pp. 2091-2106, 2005.

[http://dx.doi.org/10.1109/TIP.2005.859376] [PMID: 16370462] ].

The CS reconstruction algorithm can be divided into three categories: convex relaxation method, greedy algorithm, and combinational algorithm. Convex relaxation algorithms include basis pursuit, interior-point method, gradient projection, and iterative threshold algorithm [7S. Chen, D. Donoho, and M. Saunders, "Atomic decomposition by basis pursuit", SIAM J. Sci. Comput., vol. 20, no. 1, pp. 33-61, 1998.

[http://dx.doi.org/10.1137/S1064827596304010] , 8M. Figueiredo, R.D. Nowak, and S.J. Wright, "Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems", J. Sel. Top. Sig. Process., vol. 1, no. 4, pp. 586-598, 2007.]. Greedy algorithms include matching pursuit (MP), orthogonal MP (OMP), and regularized OMP [9D. Needell, and R. Vershynin, "Uniform uncertainty principle and signal recovery via regularized orthogonal matching pursuit", Found. Comput. Math., vol. 9, no. 3, pp. 317-334, 2009.

[http://dx.doi.org/10.1007/s10208-008-9031-3] ]. Combinational algorithms include Fourier sampling and chain tracking [10A.C. Gilbert, S. Muthukrishnan, and M.J. Strauss, "Improved time bounds for near-optimal sparse Fourier representation", Int. Soc. Optical Engi., vol. 5914, 2005pp. 1-15 Bellingham WA

[http://dx.doi.org/10.1117/12.615931] ]. Among these algorithms, greedy algorithm is the most widely used. To the best of our knowledge, most current improvements on CS are based on greedy algorithm, and research results of Contourlet transform applied to CS have not been published. Inspired by wavelet compressive sensing, we use Contourlet transform to realize the sparse representation of images [11Liao Bin, and Lv. Jintao, "A novel watermark embedding scheme using compressive sensing in wavelet domain", The Open Cyber. Sys. J., pp. 1-6, 2015. Sep]. The high frequency sub-bands corresponding to Contourlet decomposition are orthogonal and exhibit good non-correlation with the measurement matrix, further improving the accuracy of image reconstruction.

In this study, we propose a new image reconstruction algorithm based on Contourlet transform and interleaving extraction. We also adopt the traditional OMP reconstruction algorithm. The experimental results, based on subjective and objective evaluation indicators, confirm the advantages of our proposed algorithm.

The rest of the work is organized as follows. Section 2 introduces related work on CS theory. Section 3 describes the proposed scheme. Section 4 analyzes the experimental results. Finally, Section 5 presents the conclusion.

2. RELATED WORK ON COMPRESSIVE SENSING

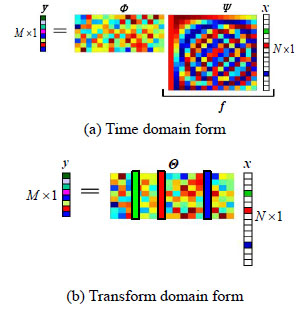

2.1. Encoding Observations and Measurements

Assuming that N × 1 signal f ϵ RN is not sparse in the time domain, the linear observation process is considered as y = ϕ f, where ϕ is a M × N matrix (M < N). If f is sparse under a set of orthogonal bases, then according to the formula f = ψ x, where ψ is the dimension of N × N orthogonal matrix, the sparse form or approximate sparse form of the transform domain coefficient x replaces the time domain form, f and y can be expressed as follows:

|

(1) |

In Formula (1), the CS matrix Θ = ϕψ is a M × N matrix, and the observation vector y is linearly superimposed by the signal sparse value in Θ [12J.C. Ferreira, "Quantization noise on image reconstruction using model-based compressive sensing", IEEE Latin America T., vol. 13, no. 4, pp. 1167-1177, 2015.

[http://dx.doi.org/10.1109/TLA.2015.7106372] , 13A. Maronidis, E. Chatzilari, S. Nikolopoulos, and I. Kompatsiaris, "Smart Sampling and Optimal Dimensionality Reduction of Big Data Using Compressed Sensing", In: Big Data Optimization: Recent Developments and Challenges., Springer International Publishing: NewYork, USA, 2016, pp. 251-280.

[http://dx.doi.org/10.1007/978-3-319-30265-2_12] ]. Fig. (1 ) shows the basic CS encoding steps.

) shows the basic CS encoding steps.

|

Fig. (1) Schematic of CS encoding. |

We denote ϕ as the measurement matrix and ψ as the sparse decomposition matrix. The form of ϕ exists independently of signal. f . ψ is composed of any set of orthogonal bases or compact frame. The set of orthogonal bases decomposes signal f into a sparse form and is only related to the reconstruction of the signal.

To ensure observed signals exist in a sufficiently sparse transform domain and then obtain CS measurements, we must determine a set of base vectors representing the original signal in sparse form [14A. Gholami, "Residual statics estimation by sparsity maximization", Geophysics, vol. 78, no. 1, pp. V11-V19, 2013.

[http://dx.doi.org/10.1190/geo2012-0035.1] ]. Considering the optimal performance of Contourlet transform in image sparse representation, we decide to realize image sparse decomposition in the Contourlet domain.

According to Formula (1), the CS matrix Θ ensures that x with length N is recoverable by M (M < N) observed values of y. Cand's and Tao introduced the concept of “restricted isometry property” (RIP). RIP explains that to completely reconstruct the original signal, the observations should not map two different sparse signals to the same observation set [15G. Yu, and G. Sapiro, "Statistical compressed sensing of Gaussian mixture models", IEEE Trans. Signal Process., vol. 59, no. 12, pp. 5842-5858, 2011.

[http://dx.doi.org/10.1109/TSP.2011.2168521] ].

The Gaussian measurement matrix ϕ and ψ = 1 are not correlated. Once ϕ is selected, regardless of what orthogonal matrix ψ is, the CS matrix will be an independent, identically distributed Gaussian matrix, with a high probability of meeting RIP [16F. Renna, R. Calderbank, L. Carin, and M. R. D. Rodrigues, "Reconstruction of signals drawn from a Gaussian mixture via noisy compressive measurements", IEEE Trans. Signal Process., vol. 62, no. 9, pp. 2265-2277, 2014. May

[http://dx.doi.org/10.1109/TSP.2014.2309560] ]. As a result, we select the Gaussian random matrix as the measurement matrix.

2.2. Decoding Reconstruction

For sparse coefficient vector x, if, Θx = y then for any vector r(Θr = 0) located in empty set space N(Θ) of Θ, all will have Θ (x + r)= y. There should be infinite numbers of solution x' that satisfies Θx' = y in Formula (1) when M < N. The reconstruction algorithm aims to find the sparsest coefficient vector x of the signal in the solution set space H = N (Θ) + x . We apply the optimization algorithm to find the solution that best meets sparse conditions. The lp norm of vector is defined x as:

|

(2) |

From the perspective of energy, l2 norm minimization finds the minimum energy solution in many possible forms of signals. However, signal energy is spread over the entire range, which is undesirable form of reducing energy [17X. Liao, H. Li, and L. Carin, "Generalized alternating projection for weighted-l2,1 minimization with applications to model-based compressive sensing", SIAM J. Imaging Sci., vol. 7, no. 2, pp. 797-823, 2014.

[http://dx.doi.org/10.1137/130936658] ]. In this study, we use l1 norm minimization to reconstruct the original signal.

Based on l1 norm minimization, the optimization equation is as follows:

|

(3) |

It is a convex optimization problem that can accurately recover the sparse and approximate compressible signals. The convex relaxation method solves the l1 norm minimization problem. In reconstruction, the number of observed values of the algorithm is the least, whereas the accuracy and computational complexity are the highest. The greedy algorithm, which is the most widely used currently, is also used to solve l1 norm minimization problem. This algorithm is between the convex relaxation method and combinatorial algorithm in operational efficiency, demand for observation values, and reconstruction precision [18W.J. Lin, Reconstruction algorithm for compressive sensing and their applications to digital watermarking., Jiaotong University: Beijing, 2011., 19M.F. Duarte, and Y.C. Eldar, "Structured compressive sensing: From theory to applications", IEEE Trans. Signal Process., vol. 59, no. 9, pp. 4053-4085, 2011.

[http://dx.doi.org/10.1109/TSP.2011.2161982] ].

3. PROPOSED ALGORITHM

According to image sparse representation in Contourlet transform domain combined with blocking, we propose a CS algorithm based on interleaving extraction in the Contourlet domain. The proposed algorithm initially decomposes the image into several sub-images evenly by interleaving extraction. The algorithm then decomposes each sub-image by Contourlet transform and selects Gaussian random matrix as the measurement matrix to obtain measured values. Finally, the image is reconstructed by OMP. Fig. (2 ) shows the algorithm flow chart.

) shows the algorithm flow chart.

|

Fig. (2) Flow chart of the proposed scheme. |

3.1. Block Compressive Sensing

The traditional CS method encounters several problems, such as high computational complexity, large memory size of compression sampling operator, and long reconstruction time. Block-based compressive sampling (BCS) can achieve better performance compromise and is widely applied in image acquisition. The computation of BCS is significantly less than that of traditional CS.

Consider an image l with size lr × lc of total pixels N = lr × lc, we aim to obtain M measurement values. The image is divided into small B × B pieces in BCS. xi represents the vector form of block i. Then, the corresponding observed value yi is expressed as

|

(4) |

where ϕB is a m × B2 matrix and

. For the entire image, the measurement matrix ϕ is a block diagonal matrix with the following form [20M. Anastasios, and C. Elisavet, "Scalable image annotation using a product compressive sampling approach", Data Sci. Adv. Analyt., 2016. Jun]:

. For the entire image, the measurement matrix ϕ is a block diagonal matrix with the following form [20M. Anastasios, and C. Elisavet, "Scalable image annotation using a product compressive sampling approach", Data Sci. Adv. Analyt., 2016. Jun]:

|

(5) |

In BCS algorithm, the m × B2 matrix ϕB needs to be stored, whereas the M × N matrix ϕ does not. When B is smaller, the storage space is smaller and is also achieved more quickly. By contrast, when is B larger, the reconstruction result is better. Based on experience, B = 32 is generally taken as the size of the block.

3.2. Interleaving Extraction

The BCS algorithm can greatly reduce computations with the reduced image block. However, BCS itself has many shortcomings. Despite a great advantage in the reconstruction speed, image quality declines [21J.E. Fowler, S. Mun, and E.W. Tramel, "Block-based compressed sensing of images and video", Found. Trends Signal Process, vol. 4, no. 4, pp. 297-416, 2012.

[http://dx.doi.org/10.1561/2000000033] ].

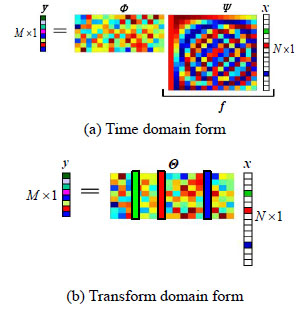

The traditional block method reduces the quality of the reconstructed image to a certain extent, whereas interleaving extraction assigns any adjacent pixels in the image to different descriptions. Given the smooth gray variation in the adjacent pixels in natural images, each sub-image formed by interleaving extraction is of the same importance, and the correlation between each other is strong, which can be used to describe the original image [22W. Liu, and C. Zhao, "Image multiple description coding method based on interleaving extraction and block compressive sensing strategy", J. Electron. Inf. Tech., vol. 33, no. 2, pp. 461-465, 2011.]. As an example, Fig. (3 ) shows the 2-Extraction, 4-Description using down-sampling decimation mode.

) shows the 2-Extraction, 4-Description using down-sampling decimation mode.

|

Fig. (3) Image division by interleaving extraction. |

Fig. (3 ) clearly shows that 2-Extraction generates a sub-image by extracting a pixel by every two rows and two columns. By analogy, n-Extraction extracts a pixel by every n rows and n columns. The procedure is equivalent to dividing the image into non-overlapping pieces of size n × n, then sequentially assigning pixels to the sub-images. As a result, each image is a description. Fig. (4

) clearly shows that 2-Extraction generates a sub-image by extracting a pixel by every two rows and two columns. By analogy, n-Extraction extracts a pixel by every n rows and n columns. The procedure is equivalent to dividing the image into non-overlapping pieces of size n × n, then sequentially assigning pixels to the sub-images. As a result, each image is a description. Fig. (4 ) shows the result of interleaving extraction for the lena.bmp image. The image has a size of 256*256. Each sub-image clearly describes the original image.

) shows the result of interleaving extraction for the lena.bmp image. The image has a size of 256*256. Each sub-image clearly describes the original image.

|

Fig. (4) 2-Extraction, 4-Description effect of interleaving extraction. |

3.3. Contourlet Transform

Contourlet transform is a multi-resolution, localized, and multi-directional real two-dimensional image representation method. The support section of the base has a long strip structure. The aspect ratio of the strip structure changes based on scale, which is similar to the shape of the contour, and has an anisotropic scale, as shown in Fig. (5 ). Among Contourlet coefficients, the energy of coefficients representing image edge is more concentrated, that is, the Contourlet transform for the curve exhibits a more sparse expression.

). Among Contourlet coefficients, the energy of coefficients representing image edge is more concentrated, that is, the Contourlet transform for the curve exhibits a more sparse expression.

|

Fig. (5) Curve description of Contourlet transform. |

Contourlet transform separately analyzes multi-scale and direction. First, multi-scale analysis is performed using Laplacian Pyramid (LP) to identify singular points. The singular points are then synthesized by directional filter bank (DFB) in the same direction as a factor to capture high frequency components. Fig. (6 ) shows the flow chart of Contourlet transform. LP also functions to avoid “leakage” of low frequency components because the directional filter itself is unsuitable for processing the low frequency part of the image. First, the original image is decomposed by LP to obtain low and high frequency images at each level. Then, LP decomposes the low frequency image, and the high frequency image is sent to DFB to obtain the sub-band information of each direction. Contourlet transform is different from other analytical methods because it features different numbers of decomposition directions at different scales. For example, the number of sub-bands of each level of decomposition is fixed in wavelet decomposition with only three directional sub-bands: horizontal, vertical, and diagonal. However, in Contourlet decomposition, the number of the sub bands in each level is exponentially two, and the number of the sub-bands varies.

) shows the flow chart of Contourlet transform. LP also functions to avoid “leakage” of low frequency components because the directional filter itself is unsuitable for processing the low frequency part of the image. First, the original image is decomposed by LP to obtain low and high frequency images at each level. Then, LP decomposes the low frequency image, and the high frequency image is sent to DFB to obtain the sub-band information of each direction. Contourlet transform is different from other analytical methods because it features different numbers of decomposition directions at different scales. For example, the number of sub-bands of each level of decomposition is fixed in wavelet decomposition with only three directional sub-bands: horizontal, vertical, and diagonal. However, in Contourlet decomposition, the number of the sub bands in each level is exponentially two, and the number of the sub-bands varies.

|

Fig. (6) Basic flow of Contourlet transform. |

Fig. (7 ) shows the three levels of Contourlet transform decomposition. For the original image, the first layer is wavelet decomposition, obtaining information of four direction sub-bands. In the second layer, DFB filters eight directions, obtaining information of eight direction sub-bands. In the third layer, the number of directions is sixteen, obtaining information of 16 direction sub-bands.

) shows the three levels of Contourlet transform decomposition. For the original image, the first layer is wavelet decomposition, obtaining information of four direction sub-bands. In the second layer, DFB filters eight directions, obtaining information of eight direction sub-bands. In the third layer, the number of directions is sixteen, obtaining information of 16 direction sub-bands.

|

Fig. (7) Three-level Contourlet transform decomposition diagram. |

3.4. Algorithm Steps

The proposed algorithm includes the following steps:

- Divide the original image into several sub-images by interleaving extraction.

- Use the Contourlet transform on each sub-image to obtain the Contourlet coefficients.

- Transpose part of the matrices whose column number is greater than the number of the rows, making the line number greater than or equal to the column number of the coefficient matrices.

- Select Gaussian random matrices of different dimensions to measure the signals in step (2) according to the dimensions of the coefficient matrices.

- Reconstruct the measured matrices using the OMP method.

- Transpose the transposed matrices in step (3) again after reconstruction.

- Obtain the coefficients of the reconstructed image by Contourlet inverse transform.

- Reassign the reconstructed coefficients to each sub-image using the reverse process of interleaving extraction.

4. SIMULATION RESULTS AND ANALYSIS

Numerous simulation experiments have been carried out in this study. The LP of Contourlet transform selects the “9-7” pyramid filter. The “9-7” filter is more suitable for image processing because of its linear phase and the approximately orthogonal characteristics. The “pkva” directional filter is selected as the DFB of Contourlet. Test images are selected from the standard image test datasets, and the size is 512×512 pixels. The input image is decomposed into four layers, and the numbers of the directions of each layer are 1, 4, 8, 16, adopting 2-Extraction, 4-Description. Image reconstruction quality is evaluated by peak signal to noise ratio (PSNR). PSNR is calculated by Formula (6). The sampling rate is 0.5. The results are the averaged values of repeated program runs.

|

(6) |

where,

, f' is the reconstructed image and f is the original image.

, f' is the reconstructed image and f is the original image.

To verify the universal applicability of the proposed algorithm, 10 512×512 pixel images are selected for sparse decomposition and reconstruction. Given the limited space, only partial results are shown in Fig. (8 ) and Table 1.

) and Table 1.

|

Fig. (8) Original and reconstructed image 'Lena' with different algorithms. |

The above experimental results demonstrate that the quality of the reconstructed image using the proposed algorithm is significantly better than that of traditional OMP, BCS_OMP algorithms. The proposed algorithm is applicable to images with different details. At present, the reported average PSNR of the reconstruction algorithm is within the range of 33–37 dB. Thus, the proposed algorithm provides better performance. Computational time complexity and memory requirement are not obviously improved.

CONCLUSION

We first introduce the basic principles of CS theory. Considering the original CS and BCS algorithms and the characteristics of Contourlet transform coefficients of the image, we propose a new CS algorithm based on interleaving extraction in the Contourlet domain. We perform blocking using interleaving extraction. Our blocking strategy ensures that the complexity of the observation process does not change with the image size. The proposed algorithm is simple in structure, easy to implement, and suitable for processing high-resolution images. We realize sparse image representation by Contourlet transformation. The detailed texture information of the image is effectively reconstructed by CS image reconstruction, relatively improving the visual effect of the image. Simulation experiments with different test images demonstrate that the objective evaluation index of PSNR is greatly improved, providing a new application for Contourlet transform in CS.

CONFLICT OF INTEREST

The authors confirm that this article content has no conflict of interest.

ACKNOWLEDGEMENTS

This work is supported by the Heilongjiang Provincial Natural Science Foundation for Young Scholars under Grant No. QC2014C066.

REFERENCES

| [1] | E.J. Candès, "Compressive sampling", In: Proceedings on the International Congress of Mathematicians, vol. 3. Madrid, 2006, pp. 1433-1452. |

| [2] | E.J. Candès, and M.B. Wakin, "An introduction to compressive sampling", IEEE Signal Process. Mag., vol. 25, no. 2, pp. 21-30, 2008. [http://dx.doi.org/10.1109/MSP.2007.914731] |

| [3] | X. Li, A. Rueetschi, A. Scaglione, and Y.C. Eldar, "Compressive link acquisition in multiuser communications", IEEE Trans. Signal Process., vol. 61, no. 12, pp. 3229-3245, 2013. [http://dx.doi.org/10.1109/TSP.2013.2258014] |

| [4] | J. Ma, "Improved iterative curvelet thresholding for compressed sensing and measurement", IEEE Trans. Instrum. Meas., vol. 60, no. 1, pp. 126-136, 2011. [http://dx.doi.org/10.1109/TIM.2010.2049221] |

| [5] | MN Do, and M Vetterli, "Contourlets: a new directional mutliresolution image represention, Signal", Syst. Comput., pp. 497-501, 2002. Jan. 2002 |

| [6] | M.N. Do, and M. Vetterli, "The contourlet transform: an efficient directional multiresolution image representation", IEEE Trans. Image Process., vol. 14, no. 12, pp. 2091-2106, 2005. [http://dx.doi.org/10.1109/TIP.2005.859376] [PMID: 16370462] |

| [7] | S. Chen, D. Donoho, and M. Saunders, "Atomic decomposition by basis pursuit", SIAM J. Sci. Comput., vol. 20, no. 1, pp. 33-61, 1998. [http://dx.doi.org/10.1137/S1064827596304010] |

| [8] | M. Figueiredo, R.D. Nowak, and S.J. Wright, "Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems", J. Sel. Top. Sig. Process., vol. 1, no. 4, pp. 586-598, 2007. |

| [9] | D. Needell, and R. Vershynin, "Uniform uncertainty principle and signal recovery via regularized orthogonal matching pursuit", Found. Comput. Math., vol. 9, no. 3, pp. 317-334, 2009. [http://dx.doi.org/10.1007/s10208-008-9031-3] |

| [10] | A.C. Gilbert, S. Muthukrishnan, and M.J. Strauss, "Improved time bounds for near-optimal sparse Fourier representation", Int. Soc. Optical Engi., vol. 5914, 2005pp. 1-15 Bellingham WA [http://dx.doi.org/10.1117/12.615931] |

| [11] | Liao Bin, and Lv. Jintao, "A novel watermark embedding scheme using compressive sensing in wavelet domain", The Open Cyber. Sys. J., pp. 1-6, 2015. Sep |

| [12] | J.C. Ferreira, "Quantization noise on image reconstruction using model-based compressive sensing", IEEE Latin America T., vol. 13, no. 4, pp. 1167-1177, 2015. [http://dx.doi.org/10.1109/TLA.2015.7106372] |

| [13] | A. Maronidis, E. Chatzilari, S. Nikolopoulos, and I. Kompatsiaris, "Smart Sampling and Optimal Dimensionality Reduction of Big Data Using Compressed Sensing", In: Big Data Optimization: Recent Developments and Challenges., Springer International Publishing: NewYork, USA, 2016, pp. 251-280. [http://dx.doi.org/10.1007/978-3-319-30265-2_12] |

| [14] | A. Gholami, "Residual statics estimation by sparsity maximization", Geophysics, vol. 78, no. 1, pp. V11-V19, 2013. [http://dx.doi.org/10.1190/geo2012-0035.1] |

| [15] | G. Yu, and G. Sapiro, "Statistical compressed sensing of Gaussian mixture models", IEEE Trans. Signal Process., vol. 59, no. 12, pp. 5842-5858, 2011. [http://dx.doi.org/10.1109/TSP.2011.2168521] |

| [16] | F. Renna, R. Calderbank, L. Carin, and M. R. D. Rodrigues, "Reconstruction of signals drawn from a Gaussian mixture via noisy compressive measurements", IEEE Trans. Signal Process., vol. 62, no. 9, pp. 2265-2277, 2014. May [http://dx.doi.org/10.1109/TSP.2014.2309560] |

| [17] | X. Liao, H. Li, and L. Carin, "Generalized alternating projection for weighted-l2,1 minimization with applications to model-based compressive sensing", SIAM J. Imaging Sci., vol. 7, no. 2, pp. 797-823, 2014. [http://dx.doi.org/10.1137/130936658] |

| [18] | W.J. Lin, Reconstruction algorithm for compressive sensing and their applications to digital watermarking., Jiaotong University: Beijing, 2011. |

| [19] | M.F. Duarte, and Y.C. Eldar, "Structured compressive sensing: From theory to applications", IEEE Trans. Signal Process., vol. 59, no. 9, pp. 4053-4085, 2011. [http://dx.doi.org/10.1109/TSP.2011.2161982] |

| [20] | M. Anastasios, and C. Elisavet, "Scalable image annotation using a product compressive sampling approach", Data Sci. Adv. Analyt., 2016. Jun |

| [21] | J.E. Fowler, S. Mun, and E.W. Tramel, "Block-based compressed sensing of images and video", Found. Trends Signal Process, vol. 4, no. 4, pp. 297-416, 2012. [http://dx.doi.org/10.1561/2000000033] |

| [22] | W. Liu, and C. Zhao, "Image multiple description coding method based on interleaving extraction and block compressive sensing strategy", J. Electron. Inf. Tech., vol. 33, no. 2, pp. 461-465, 2011. |